This is going to be more a discussion / wordy thing; no real code here. This is just to wander through some points, and maybe you’ll find them interesting or thought provoking. You have been warned.

At Stack Exchange, we have a fair understanding of technical debt. Like real debt, technical debt is not by necessity a bad thing – it can allow you to choose an acceptable (but not ideal) solution today, which means you can ship today, but you know that at some point you are going to have to revisit it. Like all loans, technical debt carries interest.

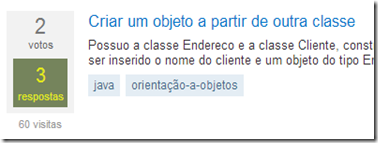

Today I’m talking about tags; specifically, the tags on Stack Exchange questions. These things:

I’m going to run through a lot of back-story, but if you’re lazy, just pretend you read all of it and skip to the last paragraph. Or, heck, go look at cat videos. I won’t tell.

Step 0 : the original problem

Way way back (long before I joined the company), the then-much-smaller team needed a way to store and query tags – in particular a wide range of “{a} and {b} and {c}”, “{d} or {e}”, “{f} but not {y}” etc queries for the “show me the questions in {some tags}” pages, or the search page (which allows a lot of tag-based filtering). Your classic database approach here might be to have a Posts table, a Tags table, and a PostTags table, and do a lot of work on PostTags, but as I understand it this simply didn’t want to perform well. Equally, we access and display questions a lot. No, a real lot. Huge amounts. The one thing that we want to happen really really efficiently is “fetch a question”.

Having that data spread over 3 tables requires complex joins or multiple queries (because you could be fetching 500 questions, which could each have 1-5 tags), and then processing the more complicated data. Because of this, we store the tags as a single character-data field in the database – all the tags in one string. This makes it possible to get the post including all the tags in a single row query, and just worry about deciphering it at the UI. Neat. But it doesn’t really allow for efficient querying.

Aside: at this point I should also note that we additionally store the data in a PostTags table (although I can’t remember whether this was the case at the time) – the inline vs normalized data is kept in sync and used for different purposes; the things you do for performance, eh?

Step 1 : the evil/awesome hack

So, we’ve got inline tag data that is simple to display, but is virtually impossible to query. Regular indexing doesn’t really work well at finding matches in the middle of character data. Enter (trumpets) SQL Server Full-Text Search. This is built into SQL Server (which we were already using), and allows all kinds of complex matching to be done using CONTAINS, FREETEXT, CONTAINSTABLE and FREETEXTTABLE. But there were some problems: stop words and non-word characters (think “c#”, “c++”, etc). For the tags that weren’t stop words and didn’t involve symbols, it worked great. So how to convince Full Text Search to work with these others? Answer: cheat, lie and fake it. At the time, we only allowed ASCII alpha-numerics and a few reserved special characters (+, -, ., #), so it was possible to hijack some non-English characters to replace these 4, and the problem of stop words could be solved by wrapping each tag in a pair of other characters. It looked like gibberish, but we were asking Full Text Search for exact matches only, so frankly it didn’t matter. A set of tags like “.net c#” thus became “éûnetà écñà”. Obviously! This worked; it performed well (remember, Stack Overflow was still very young here), and allowed the team to ship. Shipping is a pretty important feature! The team knew it was a fudge, but all was well (enough) in the world…
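To make the scrambling concrete, here is a minimal Python sketch of the encoding, reconstructed only from the examples in this post (“.net c#” and “c++”). It is not the original code. The function name `encode_legacy` is hypothetical, and the substitute used for “-” is not shown in the text, so it is left out rather than guessed.

```python
# Substitutions taken from the examples in this post; the replacement
# used for "-" is not shown in the text, so it is deliberately omitted.
SUBSTITUTIONS = {".": "û", "#": "ñ", "+": "ç"}

# Each tag is wrapped in a pair of characters so that tags which happen
# to be stop words are no longer seen as stop words.
TAG_START, TAG_END = "é", "à"


def encode_legacy(tags):
    """Encode a list of tags into the old full-text-friendly string."""
    encoded = []
    for tag in tags:
        body = "".join(SUBSTITUTIONS.get(ch, ch) for ch in tag)
        encoded.append(TAG_START + body + TAG_END)
    return " ".join(encoded)


print(encode_legacy([".net", "c#"]))  # éûnetà écñà
print(encode_legacy(["c++"]))         # écççà
```

Because every tag becomes a single “word” made only of letters, an exact-match full-text query for one encoded tag cannot accidentally match part of another tag.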

Step 2 : the creaks begin

Stack Overflow was a hit. Over time, the question count grew steadily larger, as did the number of page requests. Eventually it became apparent that our flagrant abuse of Full Text Search was becoming a performance bottleneck. To be fair, Full Text Search wasn’t really intended to be used in this way, and we were using it at an alarming rate (even after caching). But by now the team had a viable product, more developers, and enough information to judge that more effort was both necessary and worthwhile. At different points, then, our search efforts were re-written to use Lucene.Net and then Elasticsearch, and the “list by tag” work grew a bespoke entity that we call the “tag engine” (which is actually what this previous post is about) – essentially an out-of-process index service. Nowhere near as fully-featured as (say) Lucene.Net, but it only needs to do one thing.
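This post does not describe how the tag engine works internally. Purely as an illustration of the “one thing” such an index has to do, here is a toy in-memory inverted index in Python that answers the “{a} and {b}”, “{d} or {e}” and “{f} but not {y}” style of query from Step 0. The class and method names are hypothetical, and the real service is certainly more involved.

```python
from collections import defaultdict


class TagIndex:
    """Toy inverted index: tag -> set of question ids (illustration only)."""

    def __init__(self):
        self._by_tag = defaultdict(set)

    def add(self, question_id, tags):
        """Record that a question carries each of the given tags."""
        for tag in tags:
            self._by_tag[tag].add(question_id)

    def all_of(self, *tags):
        """Questions tagged with every one of the tags ("a and b and c")."""
        sets = [self._by_tag.get(t, set()) for t in tags]
        return set.intersection(*sets) if sets else set()

    def any_of(self, *tags):
        """Questions tagged with at least one of the tags ("d or e")."""
        return set().union(*(self._by_tag.get(t, set()) for t in tags))

    def with_but_not(self, include, exclude):
        """Questions tagged `include` but not `exclude` ("f but not y")."""
        return self._by_tag.get(include, set()) - self._by_tag.get(exclude, set())


index = TagIndex()
index.add(1, [".net", "c#"])
index.add(2, ["c#", "linq"])
index.add(3, ["c++"])

print(index.all_of(".net", "c#"))        # {1}
print(index.with_but_not("c#", "linq"))  # {1}
```

All three query shapes reduce to set operations once the data is keyed by tag, which is exactly what a single delimited string column cannot offer.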

Step 3 : Stack Exchange has sites about language

In time, we had requests for sites that were still primarily in English, but were about languages; French, Japanese, Russian, etc. A lot of their tags were in English, but there was a need to remove our “ASCII alpha-numerics” restriction. Fortunately, since we had Elasticsearch and the tag-engine, this mainly meant changing the few remaining Full Text Search usages (things that were used rarely, and hadn’t been worth fixing) to use alternatives. The format in the database, however, remained. And since we didn’t want to introduce even more bizarre rules, we kept the 6 reserved characters. Sorry, French.StackExchange – no “é” or “à” in your tags. In reality this was less of a problem than it sounds, as French.StackExchange elects to use accent-free tags – while sites like Russian.StackExchange could use the tags they wanted. And the world keeps turning.

Step 4 : Stack Exchange goes fully multi-lingual (well, ok, bilingual)

Enter Portuguese; in pt.stackoverflow.com, we have our first site that is completely in another language. No weasel room left now: they want tags like this:

And guess what: ç was one of our reserved tokens (representing +, so “c++” was stored as “écççà”). We couldn’t even whistle a happy tune and hope nobody would notice the gaps: they found it on day 1 of closed beta. Maybe day 2. We finally had a reason to remove this legacy from the past. But – and I cannot emphasize this enough: these changes were scary. So scary that we didn’t want to do an “update all the things” until we’d had a chance to “bed it in” on pt.StackOverflow, and fix any glitches that we’d missed. As it happened, all the code was making use of a single “decipher the tags” method, so it was sensible and pragmatic to simply make that understand both the old format and whatever we came up with now. After some thought and consideration, we settled on a pipe (bar) delimited natural representation, with leading/trailing pipes, so “.net c#” becomes simply “|.net|c#|”. This has virtues:

- very simple to parse

- we can tell immediately from the first character which format it is

- bulk update and removal of tags can be done with a simple replace (including the pipes, to avoid replacing mid-tag matches)

- and unlike the old format, you don’t need to worry about special-casing the first/last tag when doing a bulk operation (trailing spaces, etc)

Sure, there’s still a reserved |, but we can live with that. We could have used a non-printing character, but that would be very awkward in things like JSON – lots of risk of subtle bugs.
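A rough Python sketch of what a dual-format “decipher the tags” routine and a pipe-aware bulk rename could look like, based only on the examples given in this post. The function names are hypothetical, the legacy substitute for “-” is not given in the text and so is not handled, and this is not the production code.

```python
# Reverse of the legacy substitutions shown in this post; the character
# that stood in for "-" is not given in the text, so it is not handled.
LEGACY_DECODE = {"û": ".", "ñ": "#", "ç": "+"}


def parse_tags(stored):
    """Return the list of tags from either storage format."""
    if not stored:
        return []
    if stored[0] == "|":
        # New format, e.g. "|.net|c#|": the first character identifies it.
        return [tag for tag in stored.split("|") if tag]
    # Old format, e.g. "éûnetà écñà": space separated, each tag wrapped
    # in one leading and one trailing marker character.
    tags = []
    for token in stored.split(" "):
        if not token:
            continue
        body = token[1:-1]
        tags.append("".join(LEGACY_DECODE.get(ch, ch) for ch in body))
    return tags


def rename_tag(stored, old, new):
    """Bulk-rename a tag in a new-format string.

    Including the pipes in the search text means "c" cannot match
    inside "c#" or "objective-c".
    """
    return stored.replace("|" + old + "|", "|" + new + "|")


print(parse_tags("|.net|c#|"))               # ['.net', 'c#']
print(parse_tags("éûnetà écñà"))             # ['.net', 'c#']
print(rename_tag("|c|c#|", "c", "c-lang"))   # |c-lang|c#|
```

The rename works the same way for the first, middle and last tag, because every tag is surrounded by pipes on both sides.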

Once this had been working on pt.StackOverflow for a while, we flipped a switch and all new write operations switched to the new format; all “language” sites could have free access to the 6 previously reserved characters. Hoorah!

Step 5 : the backfill

When we did that, we only affected new writes. There was still data using the old format. A lot of data (not just the questions themselves: all tag edits, for example, were stored in this way). But while it remained, our “bulk remove a tag” code had to cope with two formats, which is an unnecessary maintenance overhead. So finally this week we absorbed the pain to do a batched migration of all the old data to the new format. Fairly routine, if a little scary.
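For a feel of what a batched migration like this involves, here is a small self-contained Python sketch. It uses SQLite in place of SQL Server purely so that it runs anywhere, and the `Posts` table, its columns, the batch size and the function names are all assumptions for illustration. As before, the legacy substitute for “-” is not given in the text and is not handled.

```python
import sqlite3

# Legacy substitutions as shown in this post ("-" mapping not given).
LEGACY_DECODE = {"û": ".", "ñ": "#", "ç": "+"}


def legacy_to_piped(stored):
    """Convert "éûnetà écñà" into "|.net|c#|"."""
    tags = []
    for token in stored.split():
        body = token[1:-1]  # drop the wrapper characters
        tags.append("".join(LEGACY_DECODE.get(ch, ch) for ch in body))
    return "|" + "|".join(tags) + "|"


def migrate(conn, batch_size=2):
    """Convert old-format rows a batch at a time; return rows converted."""
    total = 0
    while True:
        # Old-format rows are the non-empty ones not starting with a pipe.
        rows = conn.execute(
            "SELECT Id, Tags FROM Posts "
            "WHERE Tags <> '' AND substr(Tags, 1, 1) <> '|' LIMIT ?",
            (batch_size,),
        ).fetchall()
        if not rows:
            return total
        conn.executemany(
            "UPDATE Posts SET Tags = ? WHERE Id = ?",
            [(legacy_to_piped(tags), post_id) for post_id, tags in rows],
        )
        conn.commit()  # one short transaction per batch
        total += len(rows)


# Hypothetical sample data: three old-format rows and one new-format row.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Posts (Id INTEGER PRIMARY KEY, Tags TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO Posts (Id, Tags) VALUES (?, ?)",
    [(1, "éûnetà écñà"), (2, "écççà"), (3, "|python|"), (4, "éjavaà")],
)
converted = migrate(conn)
print(converted)  # 3
```

Because the new format is recognisable from its first character, the migration can be stopped and resumed safely: rows that are already converted are simply never selected again.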

And happy dance; the hack is no more!

So why are you telling me all this? What is the point?

Our job as engineers is not always to write the best possible thing that we can, and that can solve every problem ever, and is beautiful code that makes us want to adopt it and take it on picnics. An important part of our job is to:

At the time the original hack was put in, it was absolutely the right choice. A few lines of code, a few clicks in SQL Server, job done. Shipped. Working. It would not have been sensible to invest time getting an external indexing service working just for this. But there was always the possibility to change the implementation, and the “unscramble the mess” code was in one place. Sure: there was debt, but the level of pain was kept to a minimum, and has finally been paid back in full.