Why Doesn't Dapper Use Prepared Statements?

I had a very interesting email in my inbox this week from a Dapper user; I'm not going to duplicate the email here, but it can be boiled down to:

My external security consultant is telling me that Dapper is insecure because it doesn't use prepared statements, and is therefore susceptible to SQL injection. What are your thoughts on this?

with a Dapper-specific example of something comparable to:

List<Order> GetOpenOrders(int customerId) => _connection.Query<Order>(

"select * from Orders where CustomerId=@customerId and Status=@Open",

new { customerId, OrderStatus.Open }).AsList();

Now this is a fun topic for me, because in my head I'm reading it in the same way that I would read:

My car mechanic is telling me my car is dangerous because it doesn't use anti-smear formula screen-wash, and is therefore susceptible to skidding in icy weather. What are your thoughts on this?

Basically, these are two completely unrelated topics. You can have a perfectly good and constructive conversation about either in isolation. There are merits to both discussions. But when you smash them together, it might suggest that the person raising the issue (the "security consultant" in this case, not the person sending me the email) has misunderstood something fundamental.

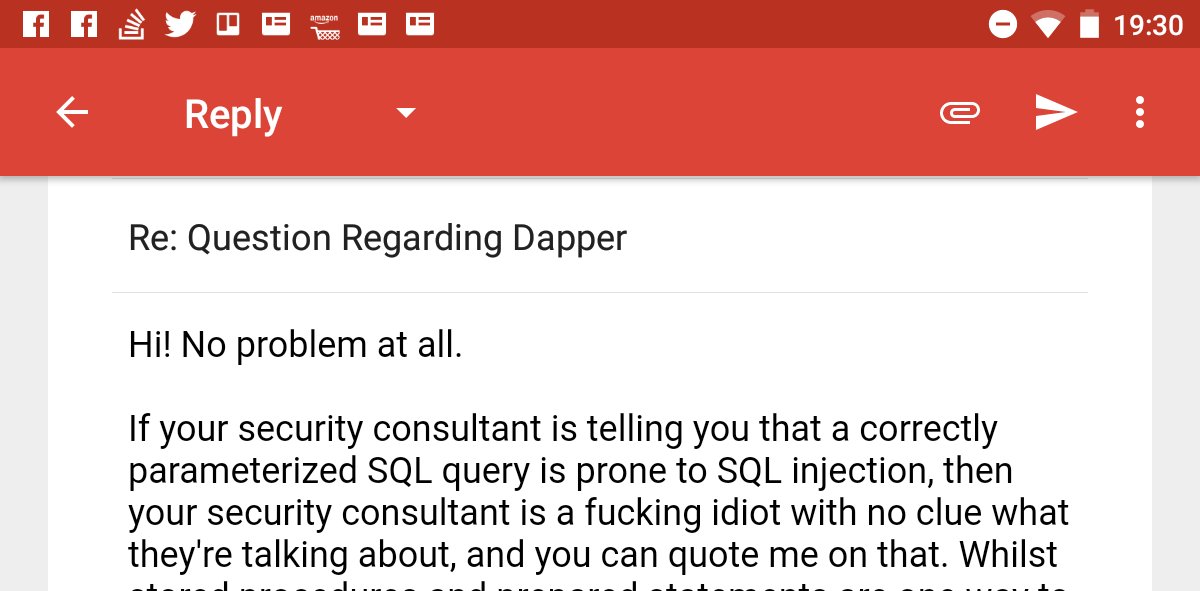

My initial response - while in my opinion valid - probably needs to be expanded upon:

So; let's take this moment to discuss the two topics and try to put this beast to bed!

Part The First: What is SQL injection?

Most folks will be very familiar with this, so I'm not going to cover every nuance, but: SQL injection is the major error made by concatenating inputs into SQL strings. It could be typified by the bad example:

string customerName = customerNameTextBox.Value; // or a http request input; whatever

var badOptionOne = connection.Query<Customer>(

"select * from Customers where Name='" + customerName + "'");

var badOptionTwo = connection.Query<Customer>(

string.Format("select * from Customers where Name='{0}'", customerName));

var badOptionThree = connection.Query<Customer>(

$"select * from Customers where Name='{customerName}'");

As an aside on badOptionThree, it really frustrates me that C# overloading prefers string to FormattableString (interpolated $"..." strings can be assigned to either, but only FormattableString retains the semantics). I would really have loved to be able to add a method to Dapper like:

[Obsolete("No! Bad developer! Bobby Tables will find you in the dark", error: true)]

public static IEnumerable<T> Query<T>(FormattableString query, /* other args not shown */)

=> throw new InvalidOperationException(...);
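To make the overload problem concrete, here is a minimal sketch showing which overload the compiler picks for an interpolated string. OverloadDemo, Which and Describe are hypothetical names used only for illustration; none of this is part of Dapper.

```csharp
using System;

static class OverloadDemo
{
    // Two overloads, mirroring the string / FormattableString choice discussed above.
    public static string Which(string sql) => "string";
    public static string Which(FormattableString sql) => "FormattableString";

    // What a FormattableString retains: the template and the arguments, still separate.
    public static string Describe(FormattableString sql) =>
        $"{sql.Format} with {sql.ArgumentCount} argument(s)";
}
```

Given a local string called name, OverloadDemo.Which($"select * from Customers where Name='{name}'") returns "string", so a FormattableString overload marked obsolete would not be chosen for the usual call. It is only selected when the caller casts explicitly, as in OverloadDemo.Which((FormattableString)$"..."), which is exactly what someone writing badOptionThree would not do.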

This category of coding error is perpetually on the OWASP "top 10" list, and is now infamously associated with xkcd's "Bobby Tables":

The problem, as the cartoon shows us, is that this allows malicious input to do unexpected and dangerous things. In this case the hack was to use a quote to end a SQL literal (');... - in this case with the attacker guessing that the clause was inside parentheses), then issue a separate command (DROP TABLE ...), then discard anything at the end of the original query using a comment (-- ...). But the issue is not limited to quotes, and frankly any attempt to play "replace/escape the risky tokens" is an arms race where you need to win every time, but the attacker only needs to win once. Don't play that game.

It can also be a huge internationalization problem, familiar to every developer who has received bug reports about the search not working for some people of Irish or Scottish descent. This (SQL injection - not Irish or Scottish people) is such an exploitable problem that readily available tools exist that can trivially search a site for exploitable inputs and give free access to the database with a friendly UI. So... yeah, you really don't want to have SQL injection bugs. No argument there.
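It can help to look at the text the server would actually receive. The following is a minimal sketch: InjectionDemo is a hypothetical helper that only builds the string and never executes it, and the hostile input is made up for illustration.

```csharp
using System;

static class InjectionDemo
{
    // Deliberately unsafe: the same concatenation as badOptionOne,
    // returned as text so that it can be inspected rather than executed.
    public static string BuildUnsafe(string customerName) =>
        "select * from Customers where Name='" + customerName + "'";
}
```

BuildUnsafe("Fred") gives select * from Customers where Name='Fred', which is fine. BuildUnsafe("O'Brien") gives select * from Customers where Name='O'Brien', where the literal ends after the O and the rest is a syntax error: that is the bug report from the Irish customer. BuildUnsafe("x'; drop table Customers; --") gives select * from Customers where Name='x'; drop table Customers; --', which is the Bobby Tables shape: a closed literal, a second command, and a comment that swallows the trailing quote.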

So how do we prevent SQL injection?

The solution to SQL injection is parameters. One complaint I have about the xkcd comic - and a range of other discussions on the topic - is the suggestion that you should "sanitize" your inputs to prevent SQL injection. Nope! Wrong. You "sanitize" your inputs to check that they are within what your logic allows - for example, if the only permitted options from a drop-down are 1, 2 and 3 - then you might want to check that they haven't entered 42. Sanitizing the inputs is not the right solution to SQL injection: parameters are. We already showed an example of parameters in my SQL example at the top, but to take our search example:

string customerName = customerNameTextBox.Value; // or a http request input; whatever

var customers = connection.Query<Customer>(

"select * from Customers where Name=@customerName",

new { customerName });

What this does is add a parameter called "customerName" with the chosen value, passing that alongside and separate to the command text, in a raw form that doesn't need it to be encoded to work inside a SQL string. At no point does the parameter value get written into the SQL as a literal. Well, except perhaps on some DB backends that don't support parameters at all, in which case frankly it is up to the DB provider to get the handling right (and: virtually all RDBMS have first-class support for parameters).

Note that parameters solve other problems too:

- the formatting of things like dates and numbers: if you use injection you need to know the format that the RDBMS expects, which is usually not the format that the "current culture" is going to specify, making it awkward; but by using a parameter, the value doesn't need to be formatted as text at all - with things like numbers and dates usually being sent in a raw binary format - some-defined-endian-fixed-width for integers (including dates), or something like IEEE754 for floating point.

- query-plan re-use: the RDBMS can cache our ...Name=@customerName query and re-use the same plan automatically and trivially (without saturating the cache with a different plan for every unique name searched), with different values of the parameter @customerName - this can provide a great performance boost (side note: this can be double-edged, so you should also probably learn about OPTIMIZE FOR ... UNKNOWN (or the equivalent on your chosen RDBMS) if you're serious about SQL performance - note this should only be added reactively based on actual performance investigations)
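The formatting point in the list above is easy to reproduce without a database. This is a minimal sketch: FormattingDemo is a hypothetical helper, the Total column is assumed for illustration, and the code only builds text.

```csharp
using System;
using System.Globalization;

static class FormattingDemo
{
    // Deliberately unsafe: the number is turned into text using whatever culture is supplied,
    // which is what happens implicitly when a value is concatenated under the current culture.
    public static string BuildUnsafe(decimal minTotal, CultureInfo culture) =>
        "select * from Orders where Total > " + minTotal.ToString(culture);
}
```

With CultureInfo.InvariantCulture and 1234.5m the text ends in Total > 1234.5; with a culture that uses a comma as the decimal separator (de-DE, for example, where that culture data is available) it ends in Total > 1234,5, which the server will not read as the same number. Passing the value as a parameter avoids the question entirely, because the value is never formatted as text.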

Dapper loves parameters

Parameterization is great; Dapper loves parameterization, and does everything it can to make it easy for you to parameterize your queries. So: whatever criticism you want to throw at Dapper: SQL injection isn't really a fair one. The only time Dapper will be complicit in SQL injection is when you feed it a query that already has an injection bug before Dapper ever sees it. We can't fix stupid.

For full disclosure: there is actually one case where Dapper allows literal injection. Consider our GetOpenOrders query from above. This can also be written:

List<Order> GetOpenOrders(int customerId) => _connection.Query<Order>(

"select * from Orders where CustomerId=@customerId and Status={=Open}",

new { customerId, OrderStatus.Open }).AsList();

Note that instead of @Open we're now using {=Open}. This is not SQL syntax - it is telling Dapper to do an injection of a literal value. This is intended for things that don't change per query such as status codes - and can result in some cases in performance improvements. Dapper doesn't want to make it easy to blow your own feet off, so it STRICTLY only allows this for integers (including enum values, which are fundamentally integers), since integers are a: very common for this scenario, and b: follow predictable rules as literals.

Part The Second: What are prepared statements?

There's often a slight confusion here with "stored procedures", so we'll have to touch on that too...

It is pretty common to issue commands to an RDBMS, where the SQL for those commands is contained in the calling application. This isn't universal - some applications are written with all the SQL in "stored procedures" that are deployed separately to the server, so the only SQL in the application is the names to invoke. There are merits of both approaches, which might include discussions around:

- isolation - the ability to deploy and manage the SQL separately to the application (which might be desirable in client applications in particular, where re-deploying all the client installations to fix a small SQL bug is hard or expensive)

- performance - historically stored procedures tended to out-perform ad-hoc commands; in most modern RDBMS this simply isn't a real concern, with the query-plan-cache working virtually identically regardless of the mechanism

- granular security - in a high security application you might not want users (even if the "user" is a central app-server) to have direct SELECT permission on the tables or views - instead preferring to wrap the allowed queries in stored procedures that the calling user can be granted EXEC permission on; of course a counter-argument there is that a blind EXEC can hide what a stored procedure is doing (so it does something the caller didn't expect), but ultimately if someone has pwned your RDBMS server, you're already toast

- flexibility - being able to construct SQL to match a specific scenario (for example: the exact combination of 17 search options) can be important to improving performance (compared, in our search example, to 17 and (@someArg is null or row.SomeCol=@someArg) clauses). Tools like LINQ and ORMs rely extensively on runtime query generation to match the queries and model known at runtime, so allowing them to execute ad-hoc parameterized commands is required; it should also be noted that most RDBMS can also execute ad-hoc parameterized commands from within SQL - via things like sp_executesql from inside a stored procedure
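The flexibility point can be sketched as follows. SearchBuilder and its three filters are hypothetical, chosen only to keep the example short; the important detail is that the column names are fixed in code and only the values, which travel as parameters, come from the caller.

```csharp
using System;
using System.Collections.Generic;

static class SearchBuilder
{
    // Builds a WHERE clause containing only the filters that were actually supplied.
    // No caller-supplied text is ever written into the SQL; values go into Args.
    public static (string Sql, Dictionary<string, object> Args) Build(
        string name = null, string city = null, int? status = null)
    {
        var clauses = new List<string>();
        var args = new Dictionary<string, object>();

        if (name != null) { clauses.Add("Name=@name"); args["name"] = name; }
        if (city != null) { clauses.Add("City=@city"); args["city"] = city; }
        if (status != null) { clauses.Add("Status=@status"); args["status"] = status.Value; }

        var sql = "select * from Customers";
        if (clauses.Count > 0) sql += " where " + string.Join(" and ", clauses);
        return (sql, args);
    }
}
```

SearchBuilder.Build(city: "Leeds", status: 2) produces select * from Customers where City=@city and Status=@status with two entries in Args, so the text varies with the combination of filters in use while the query remains fully parameterized.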

You'll notice that SQL injection is not part of that discussion on the merits of "ad-hoc commands" vs "stored procedures", because parameterization makes it a non-topic.

So: let's assume that we've had the conversation about stored procedures and we've decided to use ad-hoc statements.

What does it mean to "prepare" a statement?

"Preparing a statement" is a sometimes-optional / sometimes-mandatory (depending on the RDBMS) step required to issue ad-hoc SQL commands. Conceptually, it takes our "select * from Orders where CustomerId=@customerId and Status=@Open" query - along with the defined parameters - and says "I'm going to want to run this in a moment; kindly figure out what that means to you and get everything in place". In terms of ADO.NET, this means calling the DbCommand.Prepare() method. There are 3 possible outcomes of a Prepare() call (ignoring errors):

- it does literally nothing - a no-op; this might commonly be the case if you've told it that you're running a stored procedure (it is already as prepared as it will ever be), or if your chosen RDBMS isn't interested in the concept of prepared statements

- it runs an additional optional operation that it wouldn't have done otherwise - adding a round trip

- it runs a required operation that it was otherwise going to do automatically when we executed the query

So on the surface, the best case is that we achieve no benefit (the first and third options). The worst case is that we've added a round trip. You might be thinking "so why does Prepare() exist, if it is only ever harmful?" - and the reason is: I've only talked about running the operation once.

The main scenario in which Prepare() helps us is when you're going to be issuing exactly the same command (including the parameter definition, but not values), on exactly the same connection, many many times, and especially when your RDBMS requires command preparation. In that scenario, preparing a statement can be a very important performance tweak.
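In raw ADO.NET terms, that scenario looks roughly like the sketch below. PrepareDemo is a hypothetical helper; it assumes an already-open connection from whichever provider you use, an Orders table like the one in the earlier examples, and a provider that accepts the @-prefixed parameter name. What Prepare() actually does here varies by provider, as described above.

```csharp
using System;
using System.Collections.Generic;
using System.Data;
using System.Data.Common;

static class PrepareDemo
{
    // Runs the same parameterized command once per customer id, on one open connection.
    public static List<int> CountOrders(DbConnection connection, IEnumerable<int> customerIds)
    {
        var counts = new List<int>();
        using (DbCommand cmd = connection.CreateCommand())
        {
            cmd.CommandText = "select count(*) from Orders where CustomerId=@customerId";

            // Define the parameter (name and type) once; only its value changes per call.
            // Assumption: the provider uses @-prefixed names.
            DbParameter p = cmd.CreateParameter();
            p.ParameterName = "@customerId";
            p.DbType = DbType.Int32;
            cmd.Parameters.Add(p);

            // Provider-dependent: a no-op, an extra round trip, or required work done early.
            cmd.Prepare();

            foreach (int id in customerIds)
            {
                p.Value = id;
                counts.Add(Convert.ToInt32(cmd.ExecuteScalar()));
            }
        }
        return counts;
    }
}
```

The same DbCommand instance, on the same connection, is executed for every id; that re-use is where any benefit from preparing comes from. The values are sent as parameters whether or not Prepare() is called, so the call has no bearing on SQL injection.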

You'll notice - similarly to stored procedures - that SQL injection is not part of that discussion on the merits of "prepared statements".

It is entirely true to say that Dapper does not currently call Prepare().

Why doesn't Dapper Prepare() statements?

There are various reasons for this, but the most important one is: on most providers, a prepared statement is scoped to the connection and is stored as part of the DbCommand. To actually provide a useful prepared statement story, Dapper would need to store and re-use every DbCommand for every DbConnection. Dapper really, really doesn't want to store your connections. It is designed with high concurrency in mind, and typically works in scenarios where the DbConnection is short-lived - perhaps scoped to the context of a single web-request. Note that connection pooling doesn't mean that the underlying connection is short-lived, but Dapper only gets to see the managed DbConnection, so anything else is opaque to it.

Without tracking every DbConnection / DbCommand and without a new abstraction, the best Dapper could do would be to call .Prepare() on every DbCommand immediately before executing it - but this is exactly the situation we discussed previously where the only two options are "has no effect" and "makes things worse".

Actually, there is one scenario using the current API in which Dapper could usefully consider doing this, which is the scenario:

connection.Execute(someSql, someListOfObjects);

In this case, Dapper unrolls someListOfObjects, executing someSql with the parameters from each object in turn - on the same connection. I will acknowledge that a case could be made for Dapper to call .Prepare() in anticipation of the loop here, although it would require some changes to implement.

But fundamentally, the main objection that Dapper has to prepared statements is that typically, the connections that Dapper works with are transient and short-lived.

Could Dapper usefully offer a Prepare() API for systems with long-lived connections?

Hypothetically, yes: there is something that Dapper could do here, specifically targeted at the scenario:

I have a long-lived connection and an RDBMS that needs statements to be prepared, and I want the best possible performance when issuing repeated ad-hoc parameterized commands.

We could conceptualize an API that pins a command to a single connection:

var getOrders = connection.Prepare<Order>(

"select * from Orders where CustomerId=@customerId",

new { customerId = 123 }); // dummy args, for type inference

// ...

var orders = getOrders.Query(new { customerId }).AsList();

Note that in this imaginary API the connection is trapped and pinned inside the object that we stored in getOrders. There are some things that would need to be considered - for example, how does this work for literal injection and Dapper's fancy "in" support? A trivial answer might be: just don't support those features when used with .Prepare().

I think there's plenty of merit to have this kind of discussion, and I'm 100% open to discussing API features and additions. As long as we are discussing the right thing - i.e. the "I have a long-lived..." discussion from above.

If, however, we start that conversation (via a security consultant) via:

I want to use prepared statements to avoid SQL injection

then: that is not a useful discussion.

Tl;dr:

If you want to avoid your car skidding in icy weather, you fit appropriate tyres. You don't change the screen-wash.