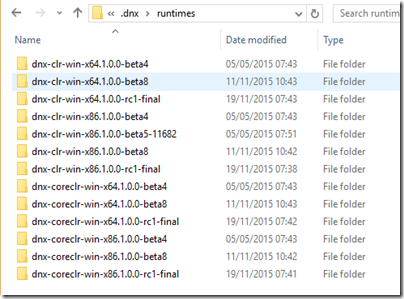

In part 1, we looked at an existing library that we wanted to move to core-clr; we covered the basics of the tools, and made only the changes required to switch to the project.json build approach, still targeting the same frameworks.

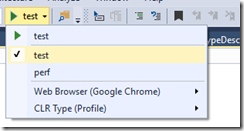

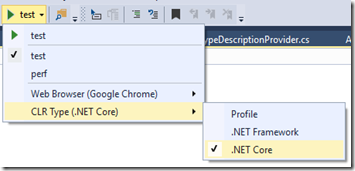

In part 2, we looked at “dnxcore50”, and how to port a library to support this new framework alongside existing .NET frameworks. We looked at how to set up and debug tests. We then introduced “dnx451”: the .NET Framework running inside DNX.

In part 3, we dive deeper still…

Targeting Hell

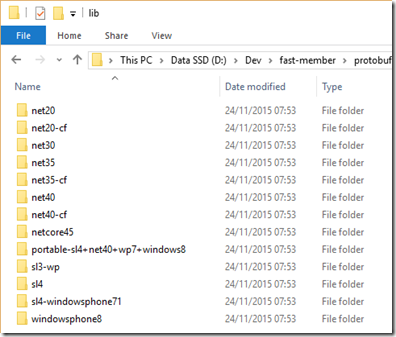

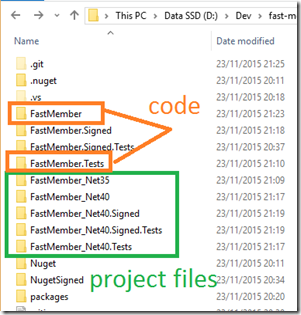

You could well be thinking that all these frameworks (dnx451, dnxcore50, net35, net40, etc.) could start to become tedious. And you’d be right! FastMember only targets a few, but as a library author, you may know the …. joy … of targeting a much wider set of .NET frameworks. Here’s the build tree for protobuf-net r668 (pre core-clr conversion):

These are incredibly hard to build currently (often requiring per-platform tools). It is a mess. Adding more frameworks isn’t going to make our life any easier. However, many of these frameworks have huge intersections. Rather than having to explicitly target 20 similar frameworks, how about if we could just target an entire flavor of similar APIs? That is what the .NET Platform Standard (aka: netstandard) introduces. This is mainly targeting a lot of the newer frameworks, but then… it is probably about time I dropped support for Silverlight 3. With these new tools, we can increase our target audience very quickly, without overly increasing our development burden.

Important: the names here are moving. At the current time (rc1), the documentation talks about “netstandard1.4” etc.; however, the tools recognise “dotnet5.5” to mean the same thing. (edited: I originally said dotnet5.4===netstandard1.4; I had an off-by-one error - the versions are 4.1 off from each other!) Basically, the community made it clear that “dotnet” was too confusing for this purpose, so the architects wisely changed the nomenclature. So “netstandard1.1” === “dotnet5.2” – savvy?

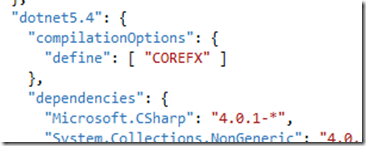

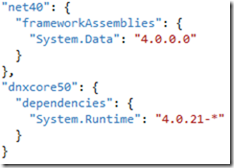

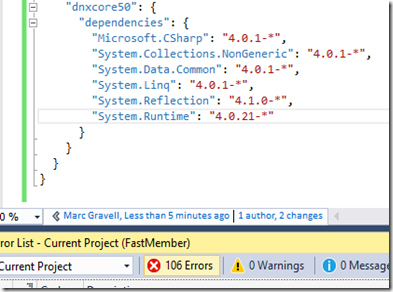

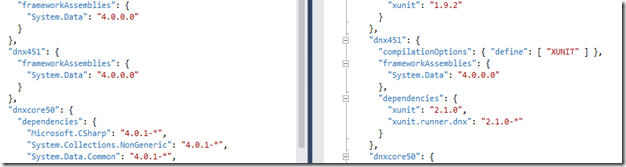

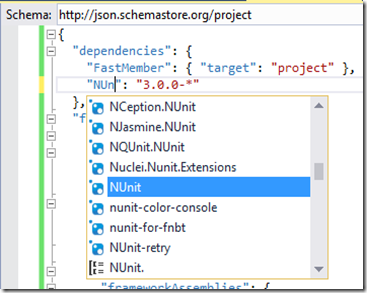

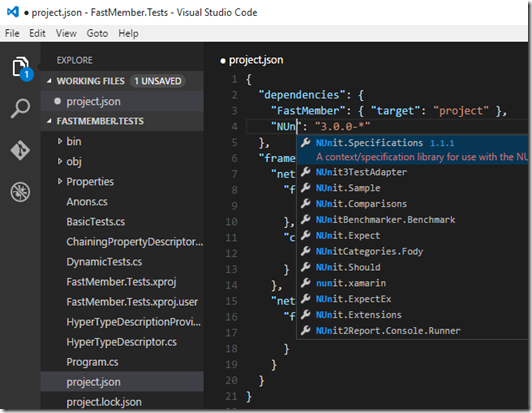

Great! So how do we do this? It is much easier than it sounds; we just change our project.json from “dnxcore50” to “dotnet5.4”:

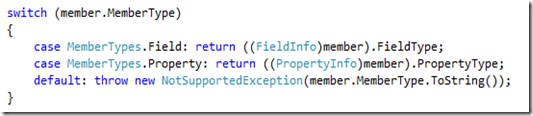
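The screenshot carries the actual change. As a rough text equivalent, the `frameworks` section of an rc1-era project.json might end up looking something like the sketch below. This assumes the rc1 schema, where each framework can have its own `compilationOptions` with a `define` list. The empty `net35`/`net40` entries stand in for the existing desktop targets. Any per-framework `dependencies` from the old `dnxcore50` section are omitted here; they move across under the new name unchanged.

```json
{
  "frameworks": {
    "net35": {},
    "net40": {},
    "dotnet5.4": {
      "compilationOptions": {
        "define": [ "COREFX" ]
      }
    }
  }
}
```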

netstandard1.4 (dotnet5.5) is the richest variant – the intersection of DNX Core 5.0 and .NET Framework 4.6.*. We're going to target dotnet5.4 (netstandard1.3). If you go backwards (1.3, 1.2, 1.1, etc.) you can target a wider audience, but with a narrower intersection of available APIs. netstandard1.1, for example, includes Windows Phone 8.0. There are various tables in the .NET Platform Standard documentation that tell you what each name targets. Notice I added a “COREFX” define. That is because the compiler now includes “DOTNET5_4” as a build symbol, not the more specific “DNXCORE50”. To avoid confusion (especially since I know it will change in the next tools drop), I’ve changed my existing “#if DNXCORE50” to “#if COREFX”, for my convenience:

We don’t have to stop at netstandard1.3, though; what I suggest library authors do is:

- get it working on what they actively want to support

- then try wider (lower number) versions to see what they can support

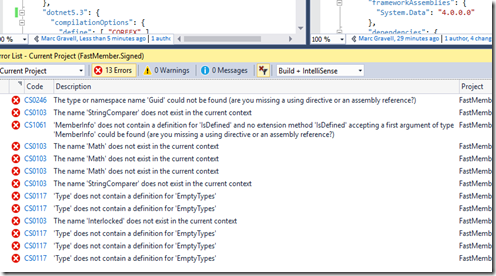

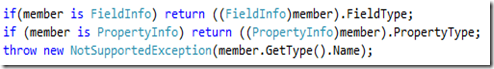

For example, changing to 1.2 (dotnet5.3) only gives me 13 errors, many of them duplicates and trivial to fix:

Interestingly, these are the same 13 errors that I get for 1.1 (dotnet5.2). If I try targeting dotnet5.1, I lose a lot of things that I absolutely depend on (TypeBuilder, etc.), so perhaps it is best to draw the line at dotnet5.2; that is still a lot more potential users than 1.3 (dotnet5.4). With some minimal changes, we can support dotnet5.2 (netstandard1.1); the surprising bits there are:

- the need to add a System.Threading dependency to get Monitor support (aka: the “lock” keyword)

- the need to explicitly specify the System.Runtime version in the test project
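Here is a minimal sketch of the widened library configuration, under the same rc1 schema assumptions as before. `dotnet5.2` replaces `dotnet5.4`, and System.Threading is added because the C# `lock` statement compiles to calls on `System.Threading.Monitor`. The version string is an rc1-style placeholder rather than a recommendation. Use whatever your package feed actually restores, and keep any other dependencies your code needs.

```json
{
  "frameworks": {
    "net35": {},
    "net40": {},
    "dotnet5.2": {
      "compilationOptions": {
        "define": [ "COREFX" ]
      },
      "dependencies": {
        "System.Threading": "4.0.11-beta-23516"
      }
    }
  }
}
```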

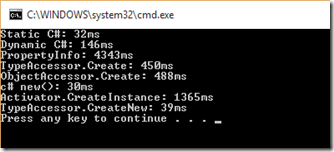

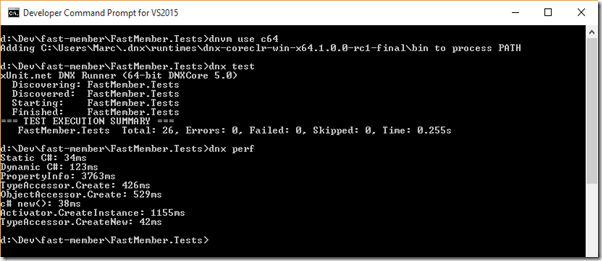

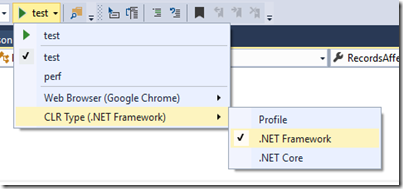

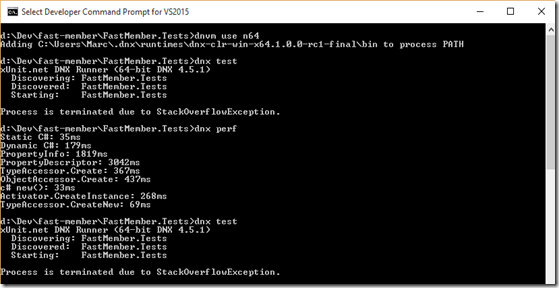

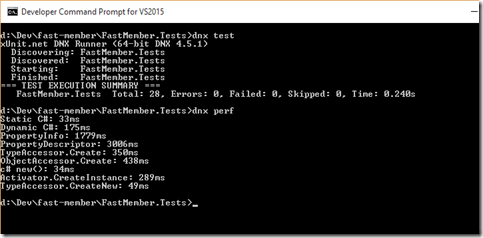

We can test this with “dnx test” / “dnx perf”, in both core-clr and .NET under dnx, and it works fine. We don’t need the dnx451-specific build any more.

Observations:

- I have seen issues with dotnet5.4 projects trying to consume libraries that expose dotnet5.2 builds; this might just be because of the in-progress tooling.

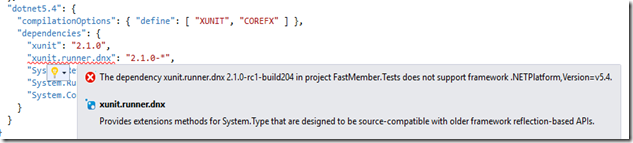

- At the moment, xunit targets dnxcore50, not dotnet*/netstandard* – so you’ll need to keep your test projects targeting dnxcore50 and dnx451 for now; however, your library code should be able to just target the .NET Platform Standard without dnx451 or dnxcore50:

Those are pretty much the key bits of netstandard: it lets you target a wider audience without defining a myriad of individual frameworks. You can still combine it with more specific targets if you want to use features of a particular framework when they are available.
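On the test-project side of the xunit note above, a sketch along these lines keeps the tests on `dnx451` and `dnxcore50` while the library itself targets the Platform Standard. The package and command names follow the xunit DNX runner of that era. Several details are assumptions to adjust for your own setup:

- the version strings
- the `FastMember` reference version
- placing the explicit System.Runtime version under `dnxcore50`

```json
{
  "dependencies": {
    "FastMember": "1.0.0-*",
    "xunit": "2.1.0",
    "xunit.runner.dnx": "2.1.0-rc1-build204"
  },
  "commands": {
    "test": "xunit.runner.dnx"
  },
  "frameworks": {
    "dnx451": {},
    "dnxcore50": {
      "dependencies": {
        "System.Runtime": "4.0.21-beta-23516"
      }
    }
  }
}
```

With a `test` command defined like this, `dnx test` runs the suite under whichever runtime is currently active, which is how the same tests cover both core-clr and .NET under dnx.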

Packaging

As this is aimed at library authors, I’m assuming you have previously deployed to nuget, so you should be familiar with the hoops you need to jump through, and the maintenance overhead. When you think about it, our project.json already defines quite a few of the key things nuget needs (dependencies, etc). The dnx tools, then, introduce a new way to package our libraries. What we need to do first is fill in some extra fields (copying from an existing nuspec, typically):
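Here is a sketch of those extra fields, assuming the rc1 project.json metadata names. Every value shown is a placeholder to replace with whatever your existing .nuspec says. These entries sit at the top level of project.json, next to the existing `dependencies` and `frameworks` sections.

```json
{
  "version": "1.0.0",
  "description": "Placeholder: copy this from your existing .nuspec",
  "authors": [ "Your Name" ],
  "owners": [ "Your Name" ],
  "tags": [ "tag1", "tag2" ],
  "projectUrl": "https://example.com/your-project",
  "licenseUrl": "https://example.com/your-project/license",
  "requireLicenseAcceptance": false
}
```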

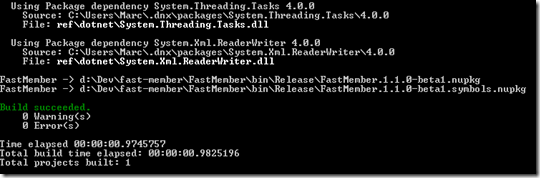

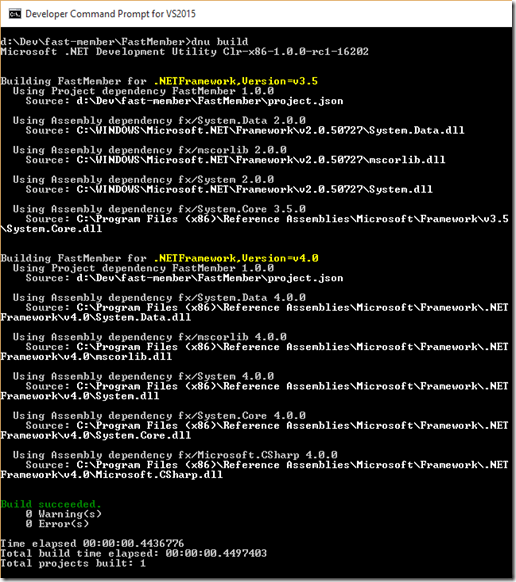

Now all we need to do is “dnu pack --configuration release”:

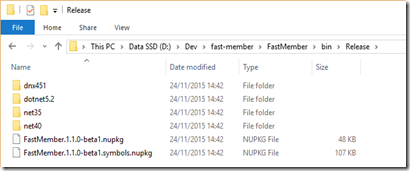

and … we’ve just built our nupkg (or two). Aside: does anyone else think “dnu pack” should default to release builds? Or is that just me? We can go in and see what it has created:

The nupkg is the packed contents, but we can also see what it was targeting. Looking at the above, it occurs to me that I should probably go back and remove the explicit dnx451 build, deferring to dotnet5.2, but… meh. It’ll all change again in rc2 ;p

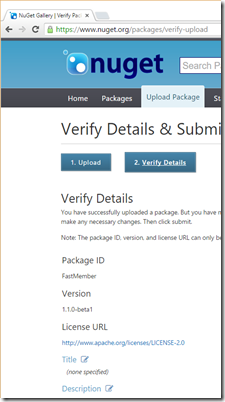

I wish there was a “dnu push” for uploading to nuget, but for now I’ll just use the manual upload:

The details are as expected, so: library uploaded! (and repeated for the strong-name version; don’t get me started on the “strong-name or don’t strong-name” debate; it makes me lose the will to live).

We have now built, tested, packaged and deployed our multi-targeting library that uses the .NET Platform Standard 1.1 and above, plus .NET 3.5 and .NET 4.0. Hoorah for us!

I should also note that Visual Studio can create packages too; the option is hidden in the project properties (it manipulates the xproj file):

and if you build this way, the outputs go into “artifacts” under the solution (not the project):

Either way: we have our nupkg, ready to distribute.

Common Problems

The feature I want isn’t available in core-clr

First, search dotnet/corefx; it is, of course, entirely possible that it isn’t supported, especially if you are doing WPF over WCF, or something obscure like … DataTable ;p Hopefully you find it tucked away in a different package; lots of things move.

The feature is there on github, but not on nuget

One thing you could do here is to try using the experimental feed. You can control your package feeds using NuGet.config in your solution folder, like in this example, which disregards whatever package feeds are defined globally, and uses the experimental feed and official nuget feed. You may need to explicitly specify a full release number (including the beta marker) if it is pre-release. If that still doesn’t work, you could perhaps enquire with the corefx team on why/when.

The third party package I want doesn’t support core-clr

If it is open source, you could always throw them a pull-request with the changes. That depends on a lot of factors, obviously. Best practice would be to communicate with the project owner first, and check for branches. If it is available in source but not on NuGet, you could build it locally, and (using the same trick as above) add a local package source – all you need to do is drop the nupkg in a folder on the file-system, and add the folder to the NuGet.config file. When the actual package gets released, remember to nuke any temporary packages from %USERPROFILE%/.dnx/packages.

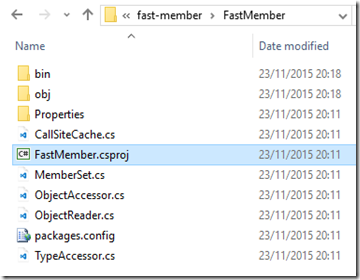

I don’t like having the csproj as well as the project.json

Long term, we can probably nuke those csproj; they are handy to keep for now, though, to make it easy for people to build the solution (minus core-clr support).

The feature I want isn’t available in my target framework / Platform Standard

Sometimes, you’ll be able to work around it. Sometimes you’ll have to restrict what you can support to more forgiving configurations. However, sometimes there are cheeky workarounds. For example, RegexOptions.Compiled is not available on a lot of Platform Standard configurations, but it is there really. You can cheat by checking whether the enum value is defined at runtime, and using it when available; here’s a nice example of that. There are uglier things you can do, too, such as using reflection to see if types and methods are actually available, even if they aren’t there in the declared API – you should try to minimize these things. As an example, protobuf-net would really like to use FormatterServices.GetUninitializedObject() when it is available. Just… be careful. This trick won’t work on things like universal applications, but then: hardly any of what protobuf-net does will work there either, so that is a moot point.

I’m having a problem with the tooling

The various teams are very open to feedback. I confess that I sometimes struggle to know what should go to the corefx team vs the asp.net team (some of the boundaries are largely arbitrary and historical), but it’ll probably find a receptive ear.

Conclusion

The core-clr project moves a lot of pieces, and a lot of things are still in flux. But: it is now stable enough that many library authors should be more than capable of porting their projects, and quite possibly simplifying their build process at the same time.

Happy coding.

![IMG_20150226_094001[1] IMG_20150226_094001[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjq99jt8jbpKM1n8gbOxwb20vM7ycrhn2IbEn6iaInknW4L2_tW5ghCKVCpCin8i_O834Y5PxrS3qtabcPbBRDaxTzjETeGJGhZFbb7VmkZhzrhdV8jPqj3Jqfg3zTHvb6DSXw-TwDp8N0z/?imgmax=800)

![IMG_20150226_093936[1] IMG_20150226_093936[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgflLpW7rFJoB7qPdsk35MMmM9rfh87xBYE4LJbWHRQ5VNCykEZ1YRTtMRZ8QZamSTDL9Tm8ZvYoS9fniCcv6q-Yhgye7VJiWFEB42OOP0aqS20ay9JilR0xPgxAtAyla29aAig5fYoHcDs/?imgmax=800)

![IMG_20150226_100233[1] IMG_20150226_100233[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEidouqZDLE9CRJHF5Bqt_wvbn2XY3mfH6_4oIFK3QpuHh8rtDdioy6pE_8MPWTykXIG3Q_a3uIzsQkKQg61KI0WkUJ3ueG5nxyERmhe9fr4GeSOuDPA6sQPP9PJR2PyCClAJRcIsgEQyIOP/?imgmax=800)