Introduction

I want to spend a few blog posts rambling about performance, reflection and library code. The common “knowledge” is that reflection is slow and to be avoided, but this misses the caveat “when used inappropriately”. Indeed, as part of library / framework code it can be incredibly useful and very fast. Just as importantly, suitable use of reflection can reduce the risk of bugs that comes from writing repetitive code by hand.

Between my musings on the Expression API, work on HyperDescriptor, and many questions on sites like stackoverflow, I’ve looked at this issue often. Moreover, I need to do some work to address the “generics” issue in protobuf-net, so I thought I’d try to combine this with some hopefully useful coverage of the issues.

The setup

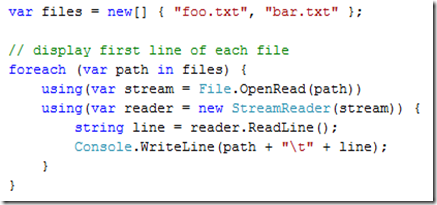

What I am really after is the fastest way of getting values into and out of objects, optimised as far as is practical. Essentially:

```csharp
var oldX = obj.X; // get
obj.X = newX;     // set
```

but for use in library code where “X” (and the type of X) isn’t known at compile-time, such as materialization, data-import/export, serialization, etc. Having learned some lessons from protobuf-net, I’m deliberately not looking for any “generics” answers here. Been there; still trying to dig my way out.
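For comparison, the plain-reflection version of that get/set, where the member name only arrives at runtime as a string, might look like the minimal sketch below. The `Foo` type and the `ReflectionBaseline` helper are hypothetical names used only for illustration; the `PropertyInfo` calls are the standard `System.Reflection` ones. Note that every call repeats the property lookup, and value-typed properties are boxed on the way out.

```csharp
using System;
using System.Reflection;

// Hypothetical sample type, used only for illustration.
public class Foo
{
    public int X { get; set; }
}

// Hypothetical helper: get/set a property whose name is only known at runtime.
public static class ReflectionBaseline
{
    public static object GetByName(object obj, string name)
    {
        // Looks the property up on every call.
        PropertyInfo prop = obj.GetType().GetProperty(name);
        if (prop == null) throw new ArgumentException("No such property: " + name);
        return prop.GetValue(obj, null); // value types come back boxed
    }

    public static void SetByName(object obj, string name, object value)
    {
        PropertyInfo prop = obj.GetType().GetProperty(name);
        if (prop == null) throw new ArgumentException("No such property: " + name);
        prop.SetValue(obj, value, null); // value is unboxed/converted by reflection
    }
}
```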

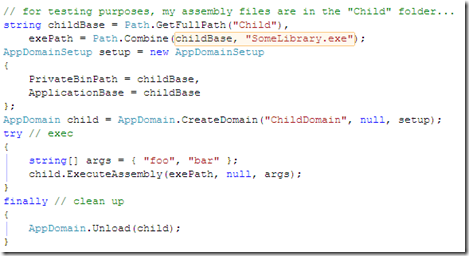

I plan to look at a range of issues in this area, including (not necessarily in this order):

- types of abstraction

- fields vs properties vs polymorphism

- mechanisms for implementing the actual get/set code

- boxing, casting and Nullable-of-T

- lookup performance

- caching of dynamic implementations

- comparison to MemberInfo / PropertyDescriptor / CreateDelegate

Note that this is an area that can depend on a lot of factors; for my tests I’ll be focusing on Intel x86 performance in .NET 3.5 SP1. I’ll try to update when 4.0 is RTM, but I’d rather not get too distracted by the 4.0 CTPs for now. Even with this fairly tight scope, I expect this to take too many words… sorry for that.

First problem – abstraction

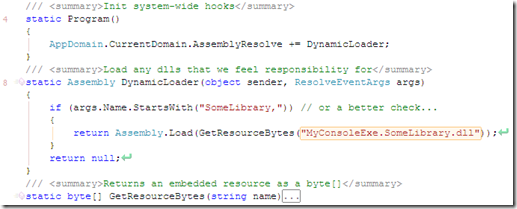

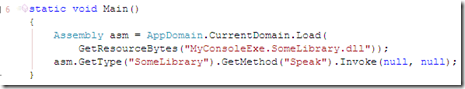

OK; so essentially we expect to end up with an API similar to reflection, so that it can be swapped into existing code without rewriting it:

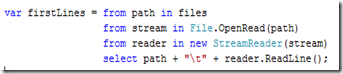

```csharp
var prop = SomeAPI["X"]; // perhaps passing in obj or a Type
object oldX = prop.GetValue(obj); // get
prop.SetValue(obj, newX); // set
```

This should look familiar to anyone who has used reflection or TypeDescriptor, and I plan to compare and contrast against those default implementations. So: does it make any noticeable difference how we implement “prop”? The answer might point us to a better solution, and it also affects how I write the tests for the other issues ;-p I can think of 3 options off the top of my head:

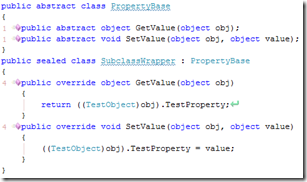

- An interface with concrete implementation per type/property

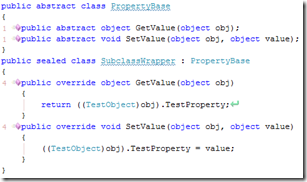

- An abstract base class with concrete implementations per type/property

- A sealed implementation with delegates (of fixed delegate types: one for the get, one for the set) passed into the constructor
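To make the three options concrete, here is a minimal hand-written sketch of each, typed against a hypothetical `Foo` class with an `int` property so that no boxing is involved. All the type names are assumptions for illustration, not the code behind the results.

```csharp
using System;

// Hypothetical sample type with a single int property.
public class Foo
{
    public int X { get; set; }
}

// Option 1: an interface, with one concrete class per type/property.
public interface IIntAccessor
{
    int GetValue(Foo obj);
    void SetValue(Foo obj, int value);
}

public sealed class FooXInterfaceAccessor : IIntAccessor
{
    public int GetValue(Foo obj) { return obj.X; }
    public void SetValue(Foo obj, int value) { obj.X = value; }
}

// Option 2: an abstract base class, with one concrete subclass per type/property.
public abstract class IntAccessorBase
{
    public abstract int GetValue(Foo obj);
    public abstract void SetValue(Foo obj, int value);
}

public sealed class FooXBaseAccessor : IntAccessorBase
{
    public override int GetValue(Foo obj) { return obj.X; }
    public override void SetValue(Foo obj, int value) { obj.X = value; }
}

// Option 3: one sealed class that wraps a getter delegate and a setter delegate.
public sealed class DelegateIntAccessor
{
    private readonly Func<Foo, int> getter;
    private readonly Action<Foo, int> setter;

    public DelegateIntAccessor(Func<Foo, int> getter, Action<Foo, int> setter)
    {
        this.getter = getter;
        this.setter = setter;
    }

    public int GetValue(Foo obj) { return getter(obj); }
    public void SetValue(Foo obj, int value) { setter(obj, value); }
}
```

The interface and base-class versions each cost one interface or virtual call per access; the delegate version calls a non-virtual method on the sealed wrapper and then invokes a delegate.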

There is no point introducing any dynamic code yet, so I’ll test each of these implemented manually (deliberately avoiding boxing), and compare them against a direct wrapper (no abstraction) and direct access (no wrapper, as in the introduction).

I’ll post the full code later (and add a link here), but these are the initial results:

| Implementation | Get performance | Set performance |
|---|---|---|
| No wrapper (baseline) | | |
| No abstraction | | |
| Base class | | |
| Interface | | |
| Delegates | | |

(numbers scaled, based on 750M iterations; lower is better)
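The figures are based on 750M iterations per test; the harness itself isn't shown, but a tight loop of roughly this shape is one way to take such measurements. This is a sketch under assumptions: `Harness`, `Foo` and `FooXBaseAccessor` are hypothetical names, and the `checksum` exists so the JIT can't treat the get loop as dead code.

```csharp
using System;
using System.Diagnostics;

// Hypothetical sample type and base-class accessor, as in the options above.
public class Foo { public int X { get; set; } }

public abstract class IntAccessorBase
{
    public abstract int GetValue(Foo obj);
    public abstract void SetValue(Foo obj, int value);
}

public sealed class FooXBaseAccessor : IntAccessorBase
{
    public override int GetValue(Foo obj) { return obj.X; }
    public override void SetValue(Foo obj, int value) { obj.X = value; }
}

public static class Harness
{
    // Times 'iterations' gets; the summed values are returned via 'checksum'
    // so the loop body has an observable result.
    public static long TimeGets(IntAccessorBase accessor, Foo obj, int iterations, out long checksum)
    {
        long sum = 0;
        Stopwatch watch = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
        {
            sum += accessor.GetValue(obj);
        }
        watch.Stop();
        checksum = sum;
        return watch.ElapsedMilliseconds;
    }

    // Times 'iterations' sets; the last value written is iterations - 1.
    public static long TimeSets(IntAccessorBase accessor, Foo obj, int iterations)
    {
        Stopwatch watch = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
        {
            accessor.SetValue(obj, i);
        }
        watch.Stop();
        return watch.ElapsedMilliseconds;
    }
}
```

A short warm-up call before the real run keeps JIT compilation out of the timings, and a Release build run outside the debugger gives more representative numbers. Each variant would get its own loop written against its own type, so the loop measures that variant's call rather than an extra layer of abstraction.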

The conclusion is that, for the purpose of representing a basic property-wrapper mechanism, a base class is just as good as a wrapper with no abstraction, so we should assume a base-class implementation. An interface is a little slower; not by enough to be hugely significant, but a property wrapper is a pretty specific thing, and I can’t see the benefit in making it more abstract than a base class.
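The options above were timed with typed, non-boxing accessors, but the reflection-like API from earlier (`prop.GetValue(obj)` / `prop.SetValue(obj, newX)`) takes and returns `object`. A minimal sketch of that shape built on a base class might look like this. `PropertyAccessor`, `FooAccessors` and the `Foo` members are hypothetical; the accessors are written by hand where a library would generate them, and the boxing/unboxing of the `int` is deliberately left in, since that is a separate topic in the list above.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sample type.
public class Foo
{
    public int X { get; set; }
    public string Name { get; set; }
}

// Base class with the reflection-like shape: object in, object out.
public abstract class PropertyAccessor
{
    public abstract object GetValue(object obj);
    public abstract void SetValue(object obj, object value);
}

// Hand-written per-property subclasses (a library would generate these).
public sealed class FooXAccessor : PropertyAccessor
{
    public override object GetValue(object obj) { return ((Foo)obj).X; }                   // boxes the int
    public override void SetValue(object obj, object value) { ((Foo)obj).X = (int)value; } // unboxes
}

public sealed class FooNameAccessor : PropertyAccessor
{
    public override object GetValue(object obj) { return ((Foo)obj).Name; }
    public override void SetValue(object obj, object value) { ((Foo)obj).Name = (string)value; }
}

// Hypothetical stand-in for "SomeAPI": a name-to-accessor lookup for one type.
public sealed class FooAccessors
{
    private readonly Dictionary<string, PropertyAccessor> map = new Dictionary<string, PropertyAccessor>
    {
        { "X", new FooXAccessor() },
        { "Name", new FooNameAccessor() }
    };

    public PropertyAccessor this[string name]
    {
        get { return map[name]; }
    }
}
```

Usage mirrors the earlier snippet: `var prop = new FooAccessors()["X"]; object oldX = prop.GetValue(obj); prop.SetValue(obj, newX);`.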

For the purpose of adding context, the (very basic) code I’ve used to test this base-class approach is simply:

Summary

It might not seem like we’ve come far yet, but I’ve described the problem and narrowed the discussion (for the purpose of micro-optimisation) to implementations using a base-class approach. This is a little annoying on one level, as the delegate approach (via DynamicMethod) is in many ways more convenient.
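For reference, the delegates for the sealed, delegate-based option could be produced at runtime with `DynamicMethod`, roughly as in the sketch below. It assumes a reference type with a public, readable and writable property, and emits object-typed `Func<object, object>` / `Action<object, object>` delegates, so it boxes and casts; `DynamicAccessorFactory` and `Foo` are hypothetical names.

```csharp
using System;
using System.Reflection;
using System.Reflection.Emit;

// Hypothetical sample type.
public class Foo { public int X { get; set; } }

public static class DynamicAccessorFactory
{
    // Emits the equivalent of: obj => (object)((DeclaringType)obj).Prop
    // Assumes a reference-type declaring type and a public getter.
    public static Func<object, object> CreateGetter(PropertyInfo prop)
    {
        DynamicMethod dm = new DynamicMethod("get_" + prop.Name, typeof(object),
            new Type[] { typeof(object) }, prop.DeclaringType, true);
        ILGenerator il = dm.GetILGenerator();
        il.Emit(OpCodes.Ldarg_0);
        il.Emit(OpCodes.Castclass, prop.DeclaringType);
        il.Emit(OpCodes.Callvirt, prop.GetGetMethod());
        if (prop.PropertyType.IsValueType)
        {
            il.Emit(OpCodes.Box, prop.PropertyType); // box value-typed results
        }
        il.Emit(OpCodes.Ret);
        return (Func<object, object>)dm.CreateDelegate(typeof(Func<object, object>));
    }

    // Emits the equivalent of: (obj, value) => ((DeclaringType)obj).Prop = (PropType)value
    // Assumes a reference-type declaring type and a public setter.
    public static Action<object, object> CreateSetter(PropertyInfo prop)
    {
        // A null return type means the dynamic method returns void.
        DynamicMethod dm = new DynamicMethod("set_" + prop.Name, null,
            new Type[] { typeof(object), typeof(object) }, prop.DeclaringType, true);
        ILGenerator il = dm.GetILGenerator();
        il.Emit(OpCodes.Ldarg_0);
        il.Emit(OpCodes.Castclass, prop.DeclaringType);
        il.Emit(OpCodes.Ldarg_1);
        il.Emit(OpCodes.Unbox_Any, prop.PropertyType); // unboxes value types; casts reference types
        il.Emit(OpCodes.Callvirt, prop.GetSetMethod());
        il.Emit(OpCodes.Ret);
        return (Action<object, object>)dm.CreateDelegate(typeof(Action<object, object>));
    }
}
```

Building a `DynamicMethod` is relatively expensive, so in practice the resulting delegates would be created once per property and cached.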

![images[1] images[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEguYOeF4pDIulMFUE85VoJnOpHhXLYCwwY7FC4nQJ75mrhJVZd9BOi8Pdhtp70C1pTkUNjSNtmlEzc0i8mIe8Q0fyV_zOyD41ZOajxR_4MgA-rh62POTI2mGM-C7WSOEYxtv372895MGzLD/?imgmax=800)