In part 1 I gave a brief introduction to the core-clr project and the key tools involved, from the perspective of a library author with existing .net libraries that they want to migrate to core-clr. I took a sample project (FastMember), and made some tooling changes to take it from a csproj-based build (targeting .net 3.5 and .net 4.0), to a build using project.json (again, targeting .net 3.5 and .net 4.0). Because the core-clr tools are not yet stable (rtm) or mainstream, I have retained the ability to build everything from the csproj – so that any arbitrary developer who clones the repo can build right away without having to install a pile of unfamiliar, unreleased tools.

In part 2, we start exploring what we can now do with the new tools.

Target Platforms

Here we’re going to look at adding a new core-clr build, and making the necessary code changes to make it compile.

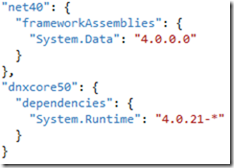

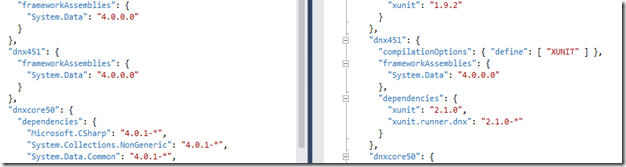

The first thing we probably want to do is start playing with core-clr. Currently (rc1), in terms of build tools this is “dnxcore50”. Our framework dependencies change from being “frameworkAssemblies” to “dependencies”: we’re now going to be pulling down each set of libraries from nuget separately (cached in %USERPROFILE%/.dnx/packages), rather than relying on a monolithic platform install. All we need to do to start, then, is add a “dnxcore50” token into our project.json (for those who have done a lot of core-clr work: we’ll be changing this later, don’t worry), including a dependency on “System.Runtime” (the version number was chosen with the help of auto-complete, so I didn’t need to go out of the editor to look this up):
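A minimal sketch of the kind of frameworks section this gives us. The empty net35 and net40 blocks are placeholders for whatever those targets already declare, and the version string is an assumption (an rc1-era value); use whatever auto-complete offers you:

```json
{
  "frameworks": {
    "net35": { },
    "net40": { },
    "dnxcore50": {
      "dependencies": {
        "System.Runtime": "4.0.21-beta-23516"
      }
    }
  }
}
```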

Since we’ve changed our dependencies, we need to use “dnu restore”. This looks at what project.json requires, and compares it to project.lock.json, which tracks what has been resolved already. If anything is missing, it looks in .dnx/packages, and if it isn’t there either, it uses our defined package sources to go and get it. This works identically for framework dependencies and 3rd-party libraries – it makes the entire thing painless.

Having added “dnxcore50” and the “System.Runtime” dependency, we can try to build – although we’re not actually expecting it to compile yet. In fact, “dnu build” reports 270 errors, and Visual Studio lights up like the Blackpool illuminations:

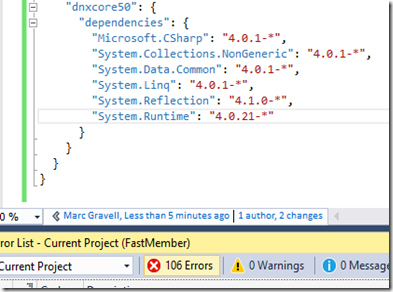

At this point, we have a small job to do of going through the errors and figuring out what packages we are missing. Granular framework packages make deployment more convenient (and hopefully more frequent), but mean we need a few more dependencies.

Let’s look at the second one – Hashtable; one way of resolving this is to play auto-complete pot-luck by guessing that this is probably in System.Collections.Something:

Since Hashtable is non-generic, it is probably in System.Collections.NonGeneric; we can add this and that error goes away:

We can go through a few obvious ones this way, getting rid of almost two-thirds of the fail:

I still have errors relating to:

- TypeBuilder / MethodBuilder / ILGenerator etc (the library does metaprogramming)

- members of Type / MemberInfo: IsValueType, IsDefined, MemberType (the library does a lot of reflection)

- IDataReader and DataTable

Which – broadly – covers some of the more subtle hurdles you need to jump.

finding rogue types

(edited: this tool does exactly this! - thanks for pointing that out, TIL).

It isn’t necessarily obvious where to find something like ILGenerator, especially since we’ve already included System.Reflection. There may be better tools, but my usual go-to place is the dotnet/corefx repo; by searching for “class ILGenerator” you can usually quickly determine whether something exists. Often you’ll hit the actual definition first time, which tells you the package in the name. In this case we get the tests, which is probably enough:

but if you’re still stuck, you can click into the test, then go back a few levels until you find the test’s project.json, and look at what it referenced:

So I’m probably going to want System.Reflection.Emit and System.Reflection.Emit.ILGeneration.

working with refactored APIs

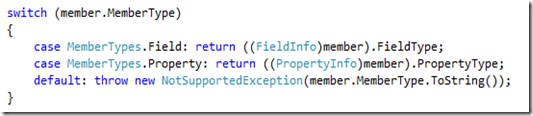

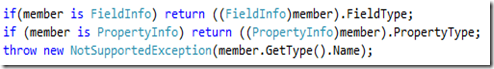

You’ll find a lot of places where the API available to you has been changed. For example, if you work with reflection – everything changes; System.Type ceases (or rather: ceased, quite a long time ago on a lot of frameworks) to be the rich “I know everything” type. The idea is that Type is a lightweight token that allows you to identify and compare types, and if you want to know more you use System.Reflection.TypeInfo; there are methods to translate between the two (GetTypeInfo() and AsType(), respectively). Likewise, MemberTypes no longer exists. As far as I know, there is no single master list of these changes – you just kinda need to tease each one out separately.

Some changes you can make in a way that works satisfactorily on all frameworks; for example, rather than doing a switch on MemberType:

we can use some combination of “is”/“as”/cast:
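As a rough sketch of the idea (MemberHelper and GetMemberType are hypothetical names for illustration, not the actual FastMember code), something like this compiles unchanged on every target because it never touches MemberInfo.MemberType:

```csharp
using System;
using System.Reflection;

// Hypothetical helper, for illustration only.
public static class MemberHelper
{
    // Returns the declared type of a field or property by testing the
    // runtime type of the MemberInfo with "as", instead of switching on
    // member.MemberType (which is not available on core-clr).
    public static Type GetMemberType(MemberInfo member)
    {
        if (member == null) throw new ArgumentNullException(nameof(member));

        var field = member as FieldInfo;
        if (field != null) return field.FieldType;

        var property = member as PropertyInfo;
        if (property != null) return property.PropertyType;

        throw new NotSupportedException(
            "Unsupported member: " + member.GetType().Name);
    }
}
```

The same pattern extends to MethodInfo, EventInfo and so on if you need them.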

In some places, there are extension methods in utility libraries you can use to bridge the gap; for example, to add a lot of familiar methods back onto Type, you can add a dependency on “System.Reflection.TypeExtensions”, and ensure that you have “using System.Reflection;” in the code-file (because these are extension methods added by the System.Reflection.TypeExtensions type).

In other places, like IsValueType, IsPublic, etc - there is no single common API we can use; it is fundamentally different (and “extension properties” aren’t a thing). The good news is that the project.json build chain makes it easy to use #if sections to switch between different implementations. The upper-case name of the target framework is automatically added as a build symbol - so we can check using “#if DNXCORE50”. If the impacted API is only used in one place, you can just use #if in-situ, but for frequently recurring things like IsValueType (which is often all over your code), I do not recommend polluting all your code with constant #if. Rather, my strategy is to create a utility class that bridges the gap (usually, but not always, via extension methods), and have just that class deal with different implementations.
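A minimal sketch of such a bridging class, assuming the DNXCORE50 symbol described above. TypeHelpers and IsValueTypeCompat are hypothetical names chosen for illustration; pick whatever fits your codebase:

```csharp
using System;
using System.Reflection;

// Hypothetical bridging class: the only place that needs to know
// which reflection API the current target framework offers.
public static class TypeHelpers
{
    public static bool IsValueTypeCompat(this Type type)
    {
#if DNXCORE50
        // core-clr: the rich members live on TypeInfo
        return type.GetTypeInfo().IsValueType;
#else
        // regular .net: Type still exposes the property directly
        return type.IsValueType;
#endif
    }
}
```

Calling code then just writes `someType.IsValueTypeCompat()` everywhere, and the #if stays in one file.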

The really nice thing is that the IDE helps us here: in the top left corner, it now tells us all the frameworks we are targeting, and we can switch between them in the context of a file – look in particular at which sections are greyed / colorized as we switch between frameworks:

Here’s the commit with everything compiling except System.Data, which I have just excised for now.

System.Data is a much more complicated story; while System.Data has been migrated, there are significant changes:

- DataTable doesn’t currently exist

- the interfaces no longer exist

I’m going to look at this System.Data reference in a bit more detail, but the takeaway here is not System.Data: it is how we can investigate problems.

The first is a big problem if you’re using DataTable; this is a controversial change (see the linked thread), but in reality it is often misused. There are times when it is genuinely the right tool, but for most scenarios you should really have moved to an ORM or micro-ORM approach by now (did I mention that Dapper is available for core-clr? – 1.50 and upwards). It is also (mis)used as part of the reader metadata API. Even if we don’t ever see DataTable, we will need (soon) a new API that has similar aims to GetSchemaTable(). This is all a bit of an aside, but the point I’m trying to emphasize is that some APIs have irreconcilable differences. If your library depends on these features, you’re going to have some soul searching to do.

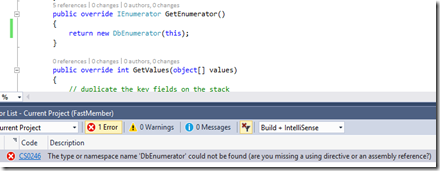

Ignoring the DataTable difference, we can still access much of the rest of System.Data; but we need to move from interfaces to abstract base types (DbDataReader instead of IDataReader). In this case, we have a little grunt work to do, but afterwards: we again have a single implementation with minimal #if. The one interesting bit is that DbEnumerator doesn’t seem to be in the current packaging:

It is in dotnet/corefx in a branch, but not in “master”. This looks like some “work in progress” in the conversion, since that API is meant to talk in terms of IDataRecord (or DbDataRecord), and neither of those is supported in core-clr currently, so it isn’t clear to me what this enumerator is meant to do on core-clr! You will occasionally find pockets like this; to seek clarification, I could look at what SqlDataReader does, or I could ask the developers. Checking github, it looks like it uses a copied implementation in System.Data.SqlClient. And despite being declared “public”, this simply isn’t in the currently published assemblies. In this case, it all looks a bit of a mess, and it isn’t critical, so I’ll ask the developers, and throw an exception in that scenario for now. Here’s my eventual core-clr conversion of the System.Data-related code.
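To illustrate the interface-to-base-class move in general terms (this is a sketch with hypothetical names, not the FastMember code): a helper written against DbDataReader rather than IDataReader needs no #if at all, because the abstract base type exists on every target.

```csharp
using System.Collections.Generic;
using System.Data.Common;

// Hypothetical helper, for illustration only.
public static class ReaderHelpers
{
    // Takes the abstract base type (DbDataReader) rather than the
    // IDataReader interface, so the same signature works everywhere.
    public static List<string> GetColumnNames(DbDataReader reader)
    {
        var names = new List<string>(reader.FieldCount);
        for (int i = 0; i < reader.FieldCount; i++)
        {
            names.Add(reader.GetName(i));
        }
        return names;
    }
}
```

Callers on regular .net that only hold an IDataReader would need a cast or an overload, which is the “grunt work” part.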

Testing Against Multiple Frameworks

Woohoo! We now have a project that compiles against .net 3.5, .net 4.0 and dnxcore50. That’s a great start, but we haven’t actually done anything except get it to compile yet. We want to run our tests, too! If you remember from part 1, FastMember has tests that use NUnit. I’m a pragmatist when it comes to test tools. I’ll be honest: at the current time, the easiest way to test on core-clr is via xunit. I’m sure the other tools will catch up, though.

Now, you’re probably thinking “but I don’t want to change all my tests”. I agree with you. Which is why I don’t do that. Instead: I cheat. We can make a final decision whether to migrate the tests more formally when everything is RTM. Right now, we just want things to work. What we’re going to do is:

- add a “dnxcore50” framework block to our test projects

- use xunit from the new framework

- add a bridge file (only active when targeting xunit) that shims between the two

- #if out any tests that won’t compile on core-clr due to missing features

With these changes, we can start moving to testing. A key piece here is learning about dnx commands; our project.json can actually declare multiple named commands (which map to assemblies in which to locate an entry-point) that dnx can then invoke. For xunit, the one we want is “xunit.runner.dnx”, but as it happens FastMember.Tests already declares a Main() entry-point that does some performance tests. As such, we can declare multiple commands:
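A sketch of what that commands section might look like. The “perf” name matches the command used later in this post; “test” is an assumed name for the xunit command, and the xunit package dependencies are omitted:

```json
{
  "commands": {
    "test": "xunit.runner.dnx",
    "perf": "FastMember.Tests"
  }
}
```

Each command name maps to the assembly that holds the entry-point, so “perf” runs the existing Main() in FastMember.Tests while the xunit command hands over to the xunit runner.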

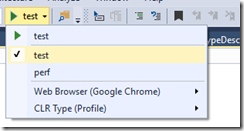

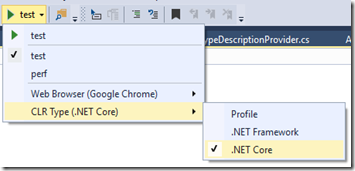

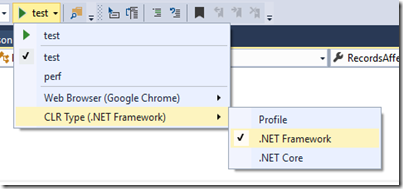

The IDE even updates to let us choose very conveniently what the “play” button should do:

If we’re going to use the IDE, we also need to make sure that we’re targeting .NET Core, since we haven’t enabled DNX tools for regular .NET yet (DNXCORE50 is .NET Core):

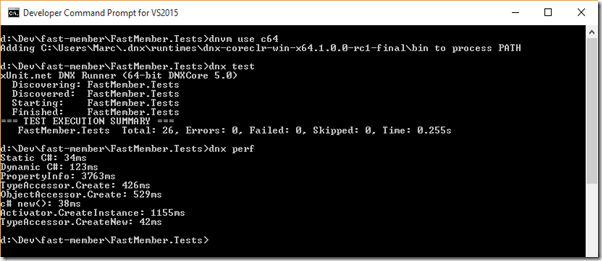

Finally, we can hit play, and amazingly our tests pass first time (this is the exception, not the rule):

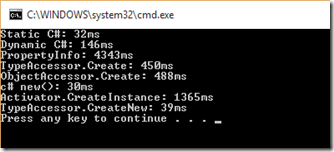

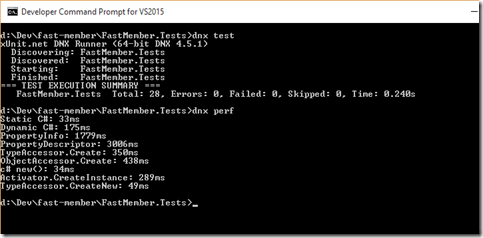

We can switch to the “perf” command and run that:

To do the same thing at the command-line, the really, really important thing to remember is to switch framework to core-clr via dnvm (here, c64 is an alias for “rc1 64-bit core-clr on windows” that I created in part 1):

In the IDE, you can debug tests with breakpoints in the ways you would hope. The test tooling is “functional” right now, but is improving at a rate.

But what about regular .net?

This is where it starts getting fun! Remember that dnxcore50 is core-clr using the dnx tools. net40 and net35 are regular .net; the dnx tools don’t really do much other than compile them. But! The dnx tools themselves allow you to run .net apps! There is a different build we use for this: dnx451. This is .net 4.5.1 on dnx. Because this is consuming framework assemblies (not nuget feeds), it is basically the same configuration as net40 – except we can now use the up-to-date xunit bits (which support dnx451); we can add a new build to all of our projects in the project.json:
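As a sketch, the extra framework block could look something like this. The System.Data entry and its version are assumptions standing in for whatever your net40 block already lists under frameworkAssemblies:

```json
{
  "frameworks": {
    "dnx451": {
      "frameworkAssemblies": {
        "System.Data": "4.0.0.0"
      }
    }
  }
}
```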

The IDE now lets us successfully run tests targeting the .NET Framework using the dnx tools:

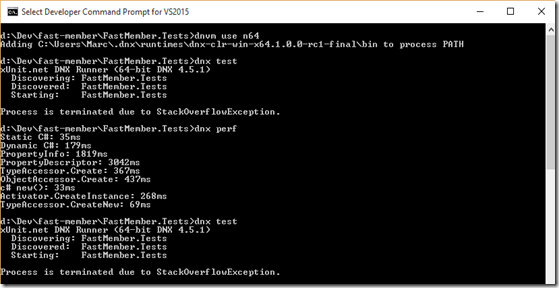

And again, we can use the command-line to run our tests from dnx, making sure to use dnvm to switch to the .NET Framework (I have an n64 alias for this):

Oops! I broke something, and it only impacts the .NET Framework version. This is easiest to diagnose in the IDE, where pressing F5 quickly tells me it is something to do with the DbDataReader.Dispose method:

Fair enough; that’s just my own brain-dead implementation. I fixed this, along with another error that I had introduced (a boolean inversion; Nick Craver is going to laugh at me for this…), and committed the dnx451 builds here. This shows the importance of testing any changes you make to your existing codebase! Our tests now pass for dnxcore50 and dnx451:

End of part 2

That got longer than I expected. We’ve now got as far as targeting dnxcore50 and dnx451 (alongside regular net35 and net40), running test suites, debugging, etc. We’ve actually seen something happen, and we’ve seen things go wrong.

Coming up in the unplanned part 3 (part 2 got too big):

- Targeting hell: what the hell is netstandard, and why should I care?

- Packaging and deployment

- Common problems