Updated: Billy referred me to the binary remoting format for DataTable; stats for BinaryFormatter now include both xml and binary.

This week, someone asked about the fastest way to send a lot of data over the wire, when that data is currently only available in a DataTable (as xml).

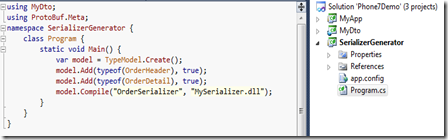

My immediate thought was simply to GZIP the xml and be done with it, but I was intrigued… can we do better? In particular (and this will come as a complete surprise) I was wondering whether protobuf-net could be used to write them. Now don’t get me wrong; I’m not a big supporter of DataTable, but they are still alive in the wild. And protobuf-net is a general-purpose serializer, so… why not try to be helpful, eh?
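To make the "just GZIP the xml" baseline concrete, here is a minimal Python sketch (Python's `zlib` standing in for .NET's GZipStream/DeflateStream). It builds xml with one element per row and one child per column, which is only a rough approximation of DataTable.WriteXml output (no schema), using hypothetical synthetic rows loosely modelled on a few SalesOrderDetail columns. It then compresses the same bytes as raw deflate and as gzip. Gzip is the same deflate stream wrapped in a 10-byte header and an 8-byte CRC32/length trailer, which is consistent with every gzip row in the results being exactly 18 bytes larger than its deflate counterpart.

```python
import zlib
import xml.etree.ElementTree as ET

def table_to_xml(table_name, columns, rows):
    """Rough approximation of DataTable-style xml: a root element, one
    element per row, one child element per column (no inline schema)."""
    root = ET.Element("DocumentElement")
    for row in rows:
        row_el = ET.SubElement(root, table_name)
        for col, value in zip(columns, row):
            ET.SubElement(row_el, col).text = str(value)
    return ET.tostring(root, encoding="utf-8")

def compress(data, wbits):
    # wbits=-15 -> raw deflate; wbits=31 -> gzip framing around the same deflate data
    c = zlib.compressobj(level=6, method=zlib.DEFLATED, wbits=wbits)
    return c.compress(data) + c.flush()

# Hypothetical synthetic rows; not the real AdventureWorks data.
columns = ["SalesOrderID", "ProductID", "OrderQty", "UnitPrice"]
rows = [(43659 + i // 10, 700 + i % 50, 1 + i % 4, f"{(i % 50) * 2.5:.4f}")
        for i in range(5000)]

xml_bytes = table_to_xml("SalesOrderDetail", columns, rows)
raw_deflate = compress(xml_bytes, -15)
gzipped = compress(xml_bytes, 31)

print(len(xml_bytes), len(gzipped), len(raw_deflate))
print(len(gzipped) - len(raw_deflate))  # gzip header + trailer overhead
```

Repetitive element names are exactly what deflate is good at, which is why xml shrinks so dramatically; the cost is the extra CPU spent compressing and decompressing.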

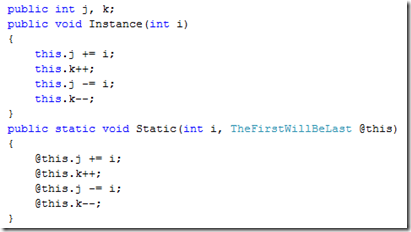

v1 won’t help at all with this, since its object model just isn’t primed for extension, but in v2 we have many options. I thought I’d look at some typical data (SalesOrderDetail from AdventureWorks2008R2; 121317 rows and 11 columns), comparing the inbuilt xml, BinaryFormatter and protobuf-net v2, each uncompressed and with gzip and deflate.
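The experimental protobuf-net code's actual layout for a DataTable isn't described here, so the following is only a hedged Python illustration of the protobuf wire format it builds on: base-128 varints, a field key of `(field_number << 3) | wire_type`, and length-prefixed strings and sub-messages. The row layout (column *i* becomes field *i + 1*, each row a sub-message under one repeated field) is a hypothetical choice for illustration, not protobuf-net's format.

```python
VARINT, LENGTH_DELIMITED = 0, 2  # protobuf wire types used in this sketch

def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 bits per byte, least-significant group first,
    high bit set on every byte except the last (non-negative ints only)."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative values")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)

def field_key(field_number: int, wire_type: int) -> bytes:
    # The key combines field number and wire type, and is itself a varint.
    return encode_varint((field_number << 3) | wire_type)

def encode_row(values) -> bytes:
    """Hypothetical layout: column i -> field i+1; ints as varints,
    everything else as length-prefixed UTF-8 text."""
    out = bytearray()
    for i, v in enumerate(values, start=1):
        if isinstance(v, int):
            out += field_key(i, VARINT) + encode_varint(v)
        else:
            data = str(v).encode("utf-8")
            out += field_key(i, LENGTH_DELIMITED) + encode_varint(len(data)) + data
    return bytes(out)

def encode_table(rows, table_field: int = 1) -> bytes:
    # Each row becomes a length-prefixed sub-message under one repeated field.
    out = bytearray()
    for row in rows:
        body = encode_row(row)
        out += field_key(table_field, LENGTH_DELIMITED) + encode_varint(len(body)) + body
    return bytes(out)

row = (43659, 707, 1, "2.9940")  # hypothetical SalesOrderDetail-like values
print(len(encode_row(row)))  # 17
```

The same four values as xml elements would need well over 100 bytes, because every value carries its column name twice; here each column costs a one-byte key, which goes a long way towards explaining the size gap in the uncompressed results.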

At this point my new friend Lucy (right) is pestering me for a walk, but… it worked; purely experimental, but committed (r356). Results shown below:

Table loaded: 11 cols, 121317 rows

| Serializer | Compression | Time | Size (bytes) |
|---|---|---|---|
| DataTable (xml) | vanilla | 2269ms/6039ms | 64,150,771 |
| DataTable (xml) | gzip | 4881ms/6714ms | 7,136,821 |
| DataTable (xml) | deflate | 4475ms/6351ms | 7,136,803 |
| BinaryFormatter (rf:xml) | vanilla | 3710ms/6623ms | 74,969,470 |
| BinaryFormatter (rf:xml) | gzip | 6879ms/8312ms | 12,495,791 |
| BinaryFormatter (rf:xml) | deflate | 5979ms/7472ms | 12,495,773 |
| BinaryFormatter (rf:binary) | vanilla | 2006ms/3366ms | 11,272,592 |
| BinaryFormatter (rf:binary) | gzip | 3332ms/4267ms | 8,265,057 |
| BinaryFormatter (rf:binary) | deflate | 3216ms/4130ms | 8,265,039 |
| protobuf-net v2 | vanilla | 316ms/773ms | 8,708,846 |
| protobuf-net v2 | gzip | 932ms/1096ms | 4,797,856 |
| protobuf-net v2 | deflate | 872ms/1050ms | 4,797,838 |

Since “walkies” beckons, I’ll be brief:

- the inbuilt xml and BinaryFormatter (as xml) are both very large natively and compress pretty well, but with noticeable additional CPU costs

- with BinaryFormatter using the binary encoding it does much less work, getting (before compression) into the same size-bracket as the xml encoding managed with compression

- protobuf-net is much faster in terms of CPU, but (uncompressed) slightly larger than the gzip xml output

- unusually for protobuf, it compresses quite well (presumably there is enough text in there to make it worthwhile); this then takes roughly two-thirds of the size, and much less CPU, compared to gzip xml
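To put numbers on the comparisons above, here is a small Python calculation over the figures reported in the results, using gzip xml as the baseline. The post doesn't say what the two timings in each pair measure, so this sketch simply calls them `t1` and `t2`.

```python
# (size in bytes, t1 in ms, t2 in ms), copied from the reported results.
# The meaning of the two timings is not stated, so they are labelled t1/t2.
results = {
    "DataTable (xml) gzip":          (7_136_821, 4881, 6714),
    "BinaryFormatter (binary) gzip": (8_265_057, 3332, 4267),
    "protobuf-net v2 vanilla":       (8_708_846, 316, 773),
    "protobuf-net v2 gzip":          (4_797_856, 932, 1096),
}

baseline = results["DataTable (xml) gzip"]

def relative(name):
    """Size, t1 and t2 as fractions of the gzip xml baseline."""
    size, t1, t2 = results[name]
    return size / baseline[0], t1 / baseline[1], t2 / baseline[2]

for name in results:
    size_ratio, t1_ratio, t2_ratio = relative(name)
    print(f"{name:32} size x{size_ratio:.2f}  time x{t1_ratio:.2f} / x{t2_ratio:.2f}")
```

Compressed protobuf-net comes out at about 0.67 of the gzip xml size, using under a fifth of the time on both measurements; uncompressed protobuf-net is about 1.22 times the gzip xml size, but needs less than an eighth of the time.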

Overall, the current experimental code is exceptionally rough and ready; it is mainly an investigation. But… should I pursue this? Do people still care enough about DataTable? Or should you just use gzip/xml in this scenario?

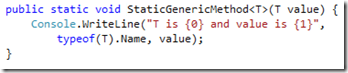

And of course, as a fallback, it can still be used at runtime without “emit”; this is noticeably slower, but still works.

Obviously there is some pragmatic middle ground, where you design an app deliberately to allow for performance, without stressing over every line of code, every stack frame, etc.